Proton Earth, Electron Moon

What if the Earth were made entirely of protons, and the Moon were made entirely of electrons?

—Noah Williams

This is, by far, the most destructive What-If scenario to date.

You might imagine an electron Moon orbiting a proton Earth, sort of like a gigantic hydrogen atom. On one level, it makes a kind of sense; after all, electrons orbit protons, and moons orbit planets. In fact, a planetary model of the atom was briefly popular (although it turned out not to be very useful for understanding atoms).[1]

[1] This model was (mostly) obsolete by the 1920s, but lived on in an elaborate foam-and-pipe-cleaner diorama I made in 6th grade science class.

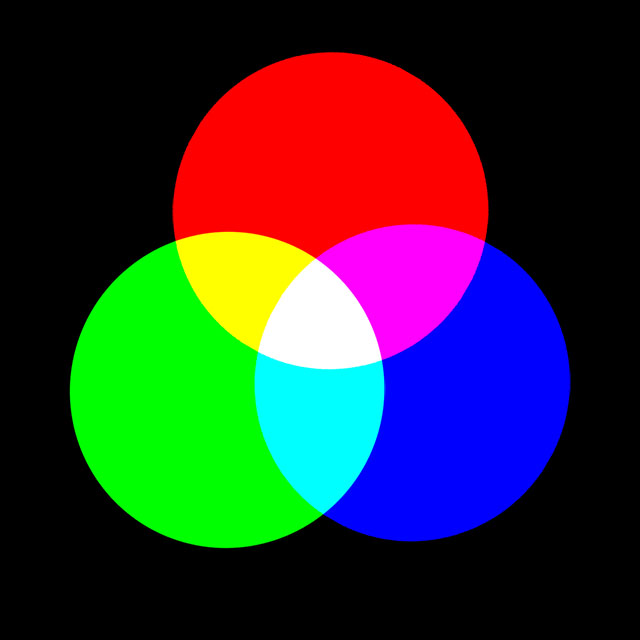

If you put two electrons together, they try to fly apart. Electrons are negatively charged, and the force of repulsion from this charge is about 42 orders of magnitude stronger than the force of gravity pulling them together.
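As a rough check on how lopsided that contest is, here is a minimal sketch that divides the Coulomb force between two electrons by the gravitational force between them. The separation cancels out because both forces fall off as 1/r². The constant and function names are my own, and the values are rounded CODATA figures.

```python
import math

# Rounded CODATA values in SI units (names are illustrative)
K_COULOMB = 8.9875517923e9      # Coulomb constant, N m^2 / C^2
G_NEWTON = 6.67430e-11          # gravitational constant, N m^2 / kg^2
E_CHARGE = 1.602176634e-19      # elementary charge, C
M_ELECTRON = 9.1093837015e-31   # electron mass, kg


def coulomb_to_gravity_ratio(charge, mass):
    """Ratio of electric to gravitational force for two identical particles.

    Both forces scale as 1/r^2, so the distance drops out of the ratio.
    """
    return (K_COULOMB * charge**2) / (G_NEWTON * mass**2)


ratio = coulomb_to_gravity_ratio(E_CHARGE, M_ELECTRON)
orders_of_magnitude = math.log10(ratio)

print(f"electric / gravitational force: {ratio:.3e}")
print(f"that is about {orders_of_magnitude:.0f} orders of magnitude")
```

For a pair of electrons the ratio comes out a little above 4 × 10^42.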

If you put 10^52 electrons together (enough to build a Moon), they push each other apart really hard. In fact, they push each other apart so hard that each electron would be shoved away with an unbelievable amount of energy.

It turns out that, for the proton Earth and electron Moon in Noah's scenario, the planetary model is even more wrong than usual. The Moon wouldn't orbit the Earth because they'd barely have a chance to influence each other; the forces trying to blow each one apart would be far more powerful than any attractive force between the two.[2]

[2] I interpreted the question to mean that the Moon was replaced with a sphere of electrons the size and mass of the Moon, and ditto for the Earth. There are other interpretations, but practically speaking the end result is the same.

If we ignore general relativity for a moment (we'll come back to it), we can calculate that the energy from these electrons all pushing on each other would be enough to accelerate all of them outward at near the speed of light.[3] Accelerating particles to those speeds isn't unusual; a desktop particle accelerator can accelerate electrons to a reasonable fraction of the speed of light. But the electrons in Noah's Moon would each be carrying much, much more energy than those in a normal accelerator: orders of magnitude more than the Planck energy, which is itself many orders of magnitude larger than the energies we can reach in our largest accelerators. In other words, Noah's question takes us pretty far outside normal physics, into the highly theoretical realm of things like quantum gravity and string theory.

[3] But not past it; we're ignoring general relativity, but not special relativity.
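To get a feel for "orders of magnitude more than the Planck energy", here is a back-of-the-envelope sketch. It assumes the electron Moon is a uniformly charged sphere with the real Moon's mass and radius, so its electrostatic self-energy is (3/5)·kQ²/R. That uniform-sphere model is my assumption, not necessarily the calculation behind the article, and all variable names are illustrative.

```python
import math

# Rounded constants in SI units (names are illustrative)
K_COULOMB = 8.9875517923e9      # Coulomb constant, N m^2 / C^2
G_NEWTON = 6.67430e-11          # gravitational constant, N m^2 / kg^2
C_LIGHT = 299_792_458.0         # speed of light, m / s
HBAR = 1.054571817e-34          # reduced Planck constant, J s
E_CHARGE = 1.602176634e-19      # elementary charge, C
M_ELECTRON = 9.1093837015e-31   # electron mass, kg
M_MOON = 7.342e22               # Moon mass, kg
R_MOON = 1.7374e6               # Moon mean radius, m

# How many electrons it takes to match the Moon's mass
n_electrons = M_MOON / M_ELECTRON
total_charge = n_electrons * E_CHARGE

# Assumption: uniformly charged sphere, U = (3/5) k Q^2 / R
self_energy = 0.6 * K_COULOMB * total_charge**2 / R_MOON

# Average share of that energy per electron, compared with the Planck energy
energy_per_electron = self_energy / n_electrons
planck_energy = math.sqrt(HBAR * C_LIGHT**5 / G_NEWTON)
ratio_to_planck = energy_per_electron / planck_energy

print(f"electrons in the Moon:  {n_electrons:.2e}")
print(f"energy per electron:    {energy_per_electron:.2e} J")
print(f"Planck energy:          {planck_energy:.2e} J")
print(f"ratio:                  {ratio_to_planck:.1e}")
```

Under these assumptions each electron carries a few billion times the Planck energy, which is well past the point where the calculation itself can be trusted.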

So I contacted Dr. Cindy Keeler, a string theorist with the Niels Bohr Institute. I explained Noah's scenario, and she was kind enough to offer some thoughts.

![[20 years later] Ok, while all theories are consistent with those observations, half of them would NOT be consistent with these alternate ones. So in some alternate universes, we could definitely rule these ones out.](/imgs/a/140/help.png)

Dr. Keeler agreed that we shouldn't rely on any calculations that involve putting that much energy in each electron, since it's so far beyond what we're able to test in our accelerators. "I don't trust anything with energy per particle over the Planck scale. The most energy we've really observed is in cosmic rays; more than LHC by circa 10^6, I think, but still not close to the Planck energy. Being a string theorist, I'm tempted to say something stringy would happen, but the truth is we just don't know."

Luckily, that's not the end of the story. Remember how we're ignoring general relativity? Well, this is one of the very, very rare situations where bringing in general relativity makes a problem easier to solve.

There's a huge amount of potential energy in this scenario: the energy that we imagined would blast all these electrons apart. That energy warps space and time just like mass does.[4] The amount of energy in our electron Moon, it turns out, is about equal to the total mass and energy of the entire visible universe.

[4] If we let the energy blast the electrons apart at near the speed of light, we'd see that energy actually take the form of mass, as the electrons gained mass relativistically. That is, until something stringy happened.
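The comparison with the visible universe can be sanity-checked to within an order of magnitude. The sketch below again assumes a uniformly charged sphere for the electron Moon, and estimates the universe's mass-energy as the critical density times the volume of a sphere 4.4 × 10^26 m in radius. Both universe figures are rough assumed values, and the names are illustrative.

```python
import math

# Rounded constants in SI units (names are illustrative)
K_COULOMB = 8.9875517923e9      # Coulomb constant, N m^2 / C^2
C_LIGHT = 299_792_458.0         # speed of light, m / s
E_CHARGE = 1.602176634e-19      # elementary charge, C
M_ELECTRON = 9.1093837015e-31   # electron mass, kg
M_MOON = 7.342e22               # Moon mass, kg
R_MOON = 1.7374e6               # Moon mean radius, m

# Rough cosmological inputs (assumed values, good to a factor of a few)
RHO_CRITICAL = 8.5e-27          # critical density, kg / m^3
R_OBSERVABLE = 4.4e26           # radius of the observable universe, m

# Electrostatic self-energy of the electron Moon, assuming uniform charge
total_charge = (M_MOON / M_ELECTRON) * E_CHARGE
self_energy = 0.6 * K_COULOMB * total_charge**2 / R_MOON

# Mass equivalent of that energy via E = m c^2
moon_mass_equivalent = self_energy / C_LIGHT**2

# Mass-energy of the observable universe: density times volume
universe_volume = (4.0 / 3.0) * math.pi * R_OBSERVABLE**3
universe_mass = RHO_CRITICAL * universe_volume

ratio = moon_mass_equivalent / universe_mass

print(f"electron Moon energy as mass: {moon_mass_equivalent:.1e} kg")
print(f"observable universe estimate: {universe_mass:.1e} kg")
print(f"ratio:                        {ratio:.1f}")
```

With these inputs the two numbers land within a factor of a few of each other, which is about as close to "equal" as this kind of estimate gets.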

An entire universe's worth of mass-energy, concentrated into the space of our (relatively small) Moon, would warp space-time so strongly that it would overpower even the repulsion of those 10^52 electrons.

Dr. Keeler's diagnosis: "Yup, black hole." But this is not an ordinary black hole; it's a black hole with a lot of electric charge.[5] And for that, you need a different set of equations: rather than the standard Schwarzschild equations, you need the Reissner–Nordström ones.

[5] The proton Earth, which would also be part of this black hole, would reduce the charge, but since an Earth-mass of protons has much less charge than a Moon-mass of electrons, it doesn't affect the result much.

In a sense, the Reissner–Nordström equations compare the outward force of the charge to the inward pull of gravity. If the outward push from the charge is large enough, it's possible the event horizon surrounding the black hole can disappear completely. That would leave behind an infinitely dense object from which light can escape: a naked singularity.
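For reference, the comparison can be written down explicitly. In the standard textbook form of the Reissner–Nordström solution (the symbols here are the conventional ones, not taken from the article), a black hole of mass $M$ and charge $Q$ has horizons at

$$
r_\pm = \frac{r_s \pm \sqrt{r_s^2 - 4 r_Q^2}}{2},
\qquad
r_s = \frac{2GM}{c^2},
\qquad
r_Q^2 = \frac{Q^2 G}{4\pi\varepsilon_0 c^4}.
$$

The square root is real only when $r_s \ge 2 r_Q$, which is the same as $Q \le M\sqrt{4\pi\varepsilon_0 G}$. If the charge exceeds that bound, there is no horizon and the singularity is naked.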

Once you have a naked singularity, physics starts breaking down in very big ways. Quantum mechanics and general relativity give absurd answers, and they're not even the same absurd answers. Some people have argued that the laws of physics don't allow that kind of situation to arise. As Dr. Keeler put it, "Nobody likes a naked singularity."

In the case of an electron Moon, the energy from all those electrons pushing on each other is so large that the gravitational pull wins, and our singularity would form a normal black hole. At least, "normal" in some sense; it would be a black hole as massive as the observable universe.[6]

[6] A black hole with the mass of the observable universe would have a radius of 13.8 billion light-years, and the universe is 13.8 billion years old, which has led some people to say "the Universe is a black hole!" (It's not.)
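Here is a hedged numerical sketch of why gravity wins. It uses the standard Reissner–Nordström condition that a horizon exists when the Schwarzschild radius r_s = 2GM/c² is at least twice the charge length scale r_Q = Q·sqrt(kG)/c². The mass M is taken to be the mass equivalent of the electrostatic self-energy of a uniformly charged sphere, which is my simplifying assumption, and the names are illustrative.

```python
import math

# Rounded constants in SI units (names are illustrative)
K_COULOMB = 8.9875517923e9      # Coulomb constant, N m^2 / C^2
G_NEWTON = 6.67430e-11          # gravitational constant, N m^2 / kg^2
C_LIGHT = 299_792_458.0         # speed of light, m / s
E_CHARGE = 1.602176634e-19      # elementary charge, C
M_ELECTRON = 9.1093837015e-31   # electron mass, kg
M_MOON = 7.342e22               # Moon mass, kg
R_MOON = 1.7374e6               # Moon mean radius, m

# Charge of a Moon-mass of electrons
total_charge = (M_MOON / M_ELECTRON) * E_CHARGE

# Assumption: the black hole's mass is the self-energy of a uniformly
# charged sphere divided by c^2 (the electrons' rest mass is negligible)
self_energy = 0.6 * K_COULOMB * total_charge**2 / R_MOON
mass = self_energy / C_LIGHT**2

# Length scales that the Reissner-Nordstrom solution compares
r_schwarzschild = 2.0 * G_NEWTON * mass / C_LIGHT**2
r_charge = total_charge * math.sqrt(K_COULOMB * G_NEWTON) / C_LIGHT**2

# A horizon exists only if r_s >= 2 r_Q; otherwise the singularity is naked
has_horizon = r_schwarzschild >= 2.0 * r_charge

print(f"r_s = {r_schwarzschild:.2e} m")
print(f"r_Q = {r_charge:.2e} m")
print("event horizon exists" if has_horizon else "naked singularity")
```

Under these assumptions r_s is larger than r_Q by about ten orders of magnitude, so the horizon survives comfortably.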

Would this black hole cause the universe to collapse? Hard to say. The answer depends on what the deal with dark energy is, and nobody knows what the deal with dark energy is.

But for now, at least, nearby galaxies would be safe. Since the gravitational influence of the black hole can only expand outward at the speed of light, much of the universe around us would remain blissfully unaware of our ridiculous electron experiment.

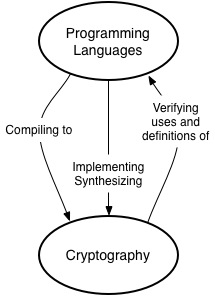

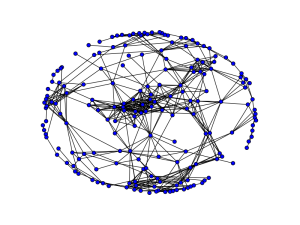

In this post, I interview


![\left[\begin{array}{c} x_{n+1} \\\ x_{n} \end{array}\right] = \left[\begin{array}{cc} 1 & 1 \\\ 1 & 0 \end{array}\right] \left[\begin{array}{c} x_{n} \\\ x_{n-1} \end{array}\right]](http://www.johndcook.com/fibonacci_matrix.png)

![\left[\begin{array}{ccc} 1 & 1 & 1 \\\ 1 & 0 & 0 \\\ 0 & 1 & 0 \end{array}\right]](http://www.johndcook.com/tribonacci_matrix.png)

Sameer: I completed my bachelor's in Computer Science at Cornell during 1994-1998, and completed a Masters & PhD at MIT between 1998-2004. My advisor at MIT was

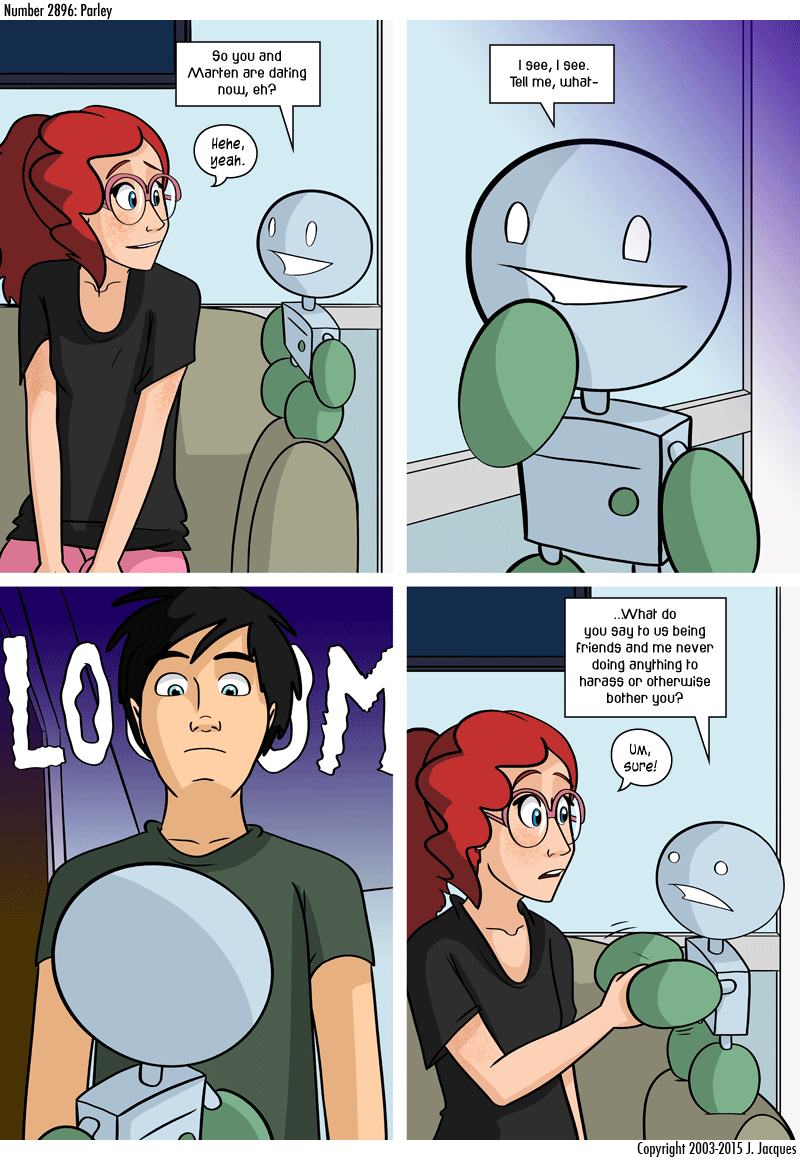
